Thoughts on Generalization in Diffusion Models

Generalization is the implicit alignment between a neural network and the underlying data distribution, an alignment shaped by both the data and human perception. For diffusion models, generalization means generating novel, meaningful samples rather than reproducing training examples.

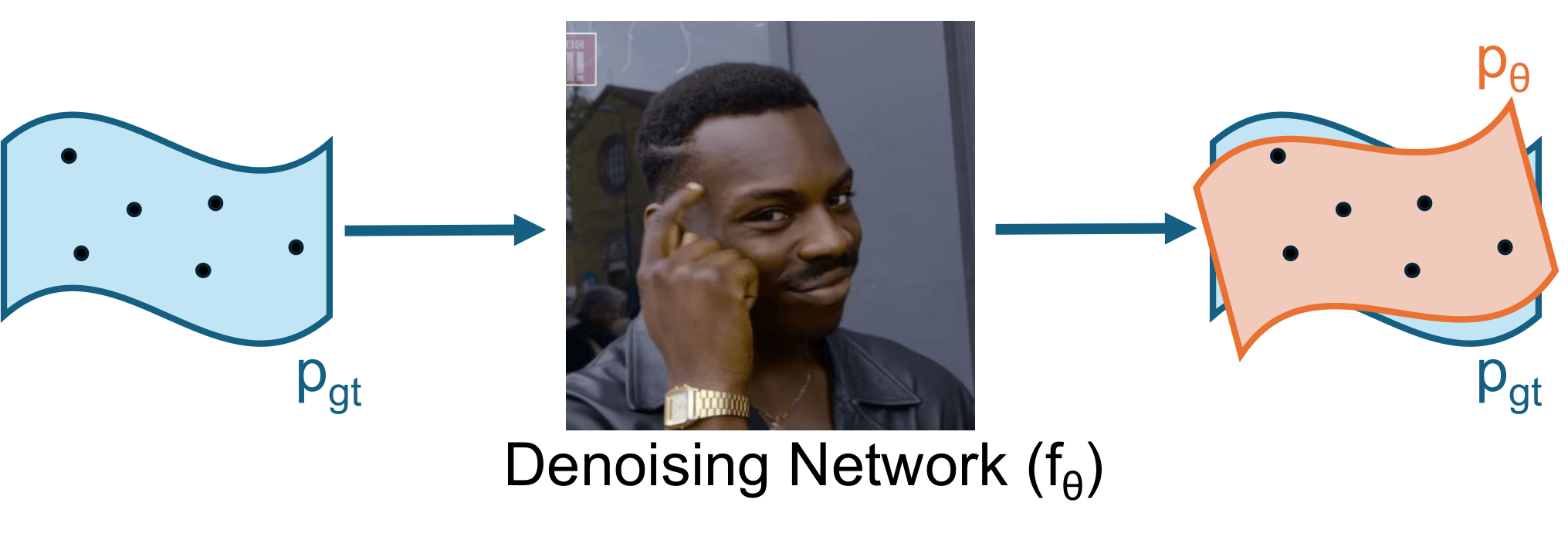

However, it is far from obvious why a network, among all the solutions that fit the training data, should settle on one that also fits unseen data well and therefore generates new samples.
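A toy sketch can make this concrete. It assumes Gaussian noising x = x0 + σε and a hypothetical training set of three 1-D points. If a model reached the exact minimizer of the denoising loss on the training set, it would implement the empirical posterior-mean denoiser, which is a softmax-weighted average of the training points. Sampling with that denoiser through a simple Euler solver for the probability-flow ODE (one common sampler, not necessarily the setup used in the paper) lands on a training point every time. This solution fits the training data perfectly and generates nothing new. The names `empirical_denoiser`, `sample` and `TRAIN`, and the noise schedule, are illustrative assumptions.

```python
import math
import random

# Hypothetical toy training set: three 1-D points (illustrative, not from the paper).
TRAIN = [-1.0, 0.5, 2.0]
SIGMA_MAX, SIGMA_MIN, STEPS = 10.0, 0.01, 500


def empirical_denoiser(x, sigma, data):
    """Posterior mean E[x0 | x] when x = x0 + sigma * eps and x0 is drawn
    uniformly from `data`: a softmax-weighted average of the training points.
    This is the exact minimizer of the denoising loss on the training set."""
    logits = [-((x - xi) ** 2) / (2.0 * sigma**2) for xi in data]
    m = max(logits)  # subtract the max logit for numerical stability
    weights = [math.exp(l - m) for l in logits]
    return sum(w * xi for w, xi in zip(weights, data)) / sum(weights)


def sample(x_init, data, sigma_max=SIGMA_MAX, sigma_min=SIGMA_MIN, steps=STEPS):
    """Euler integration of the probability-flow ODE
    dx/dsigma = (x - D(x; sigma)) / sigma from sigma_max down to sigma_min,
    using a geometric noise schedule (an assumed, illustrative choice)."""
    sigmas = [sigma_max * (sigma_min / sigma_max) ** (k / (steps - 1)) for k in range(steps)]
    x = x_init
    for s_cur, s_next in zip(sigmas[:-1], sigmas[1:]):
        d = (x - empirical_denoiser(x, s_cur, data)) / s_cur
        # Algebraically a convex combination of x and D(x; s_cur), so no overshoot.
        x = x + (s_next - s_cur) * d
    # Final denoising step at the smallest noise level.
    return empirical_denoiser(x, sigmas[-1], data)


rng = random.Random(0)
samples = [sample(SIGMA_MAX * rng.gauss(0.0, 1.0), TRAIN) for _ in range(5)]
print(samples)  # each entry is (numerically) one of the points in TRAIN
```

Under these assumptions, a network that does generalize must be converging to something other than this closed-form optimum, which is why its behavior calls for an explanation.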

In our ICLR 2026 paper, we show that this ability does not arise simply because networks are expressive enough to approximate any function. It is more closely tied to their ability to extract and exploit structure in the training data, and to learn balanced, informative representations that organize the data and adapt to complex distributions.

Read more in the official blog and the slides.