The (non-)explainability of deep learning

One reason deep learning performs so well in large-data regimes, often surpassing both humans and earlier fully explainable ML methods, is its non-explainability.

Earlier ML models were largely human-crafted. We made explicit assumptions and designed solutions that were optimal under those assumptions. As a result, these models were limited to what humans could clearly define: what we did not understand, they usually could not capture either. (The upside is that when these models fail, we often know why: the real data violate their assumptions.)
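A minimal sketch of the hand-crafted approach, assuming a toy dataset where the true relationship is y = x² on [-1, 1] but the modeler assumes a straight line. Ordinary least squares gives the best line under that assumption (in the squared-error sense) in closed form. `fit_line` is a hypothetical helper name used only for illustration. Because the linearity assumption is wrong, the residuals follow a systematic pattern, and that pattern tells us why the model fails.

```python
def fit_line(xs, ys):
    """Closed-form ordinary least squares for y = a*x + b.

    The modeling assumption (a straight line) is chosen by hand; the
    fitted coefficients are optimal only under that assumption.
    """
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return a, b


# Toy data (an assumption for illustration): the true relationship is quadratic.
xs = [i / 10 for i in range(-10, 11)]
ys = [x * x for x in xs]

a, b = fit_line(xs, ys)
residuals = [y - (a * x + b) for x, y in zip(xs, ys)]

# Residuals are positive at both ends and negative in the middle: a systematic
# U-shaped pattern that points directly at the violated linearity assumption.
print(f"slope={a:.3f}, intercept={b:.3f}")
print("residual signs:", "".join("+" if r > 0 else "-" for r in residuals))
```

On this symmetric data the slope comes out essentially zero, so the "model" is just the mean of y. The residual signs make the failure mode readable: the data curve upward, and a line cannot.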

Deep learning is different. We build a high-capacity structure without specifying the exact function each layer computes, then let data and optimization shape its behavior. These models can exceed human performance by discovering patterns that are difficult to articulate and by learning useful rules automatically. They can also make severe mistakes, and because no one wrote those rules down, the cause is often hard to pin down.
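A minimal sketch of this idea, assuming the same kind of toy task (learning y = x² on [-1, 1]) and a one-hidden-layer tanh network trained by plain full-batch gradient descent. The function `train_mlp` and its hidden width, learning rate, epoch count, and initialization are hypothetical illustrative choices, not a prescribed method. Only the capacity and the optimizer are fixed by hand; the shape of the function comes from the data.

```python
import math
import random


def train_mlp(xs, ys, hidden=8, lr=0.1, epochs=5000, seed=0):
    """Fit y ~ f(x) with a one-hidden-layer tanh network (hypothetical helper).

    Nothing about the target function is specified; we choose only the
    architecture (capacity) and the optimizer (full-batch gradient descent on MSE).
    """
    rng = random.Random(seed)
    w1 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]   # input -> hidden weights
    b1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]   # hidden -> output weights
    b2 = 0.0
    n = len(xs)

    for _ in range(epochs):
        gw1 = [0.0] * hidden
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        for x, y in zip(xs, ys):
            # Forward pass.
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            pred = sum(w2[j] * h[j] for j in range(hidden)) + b2
            # d(MSE)/d(pred) for this sample.
            d = 2.0 * (pred - y) / n
            gb2 += d
            for j in range(hidden):
                gw2[j] += d * h[j]
                dz = d * w2[j] * (1.0 - h[j] ** 2)  # backprop through tanh
                gw1[j] += dz * x
                gb1[j] += dz
        # Gradient-descent step after accumulating the full-batch gradient.
        for j in range(hidden):
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2

    def predict(x):
        return sum(w2[j] * math.tanh(w1[j] * x + b1[j]) for j in range(hidden)) + b2

    return predict


xs = [i / 10 for i in range(-10, 11)]
ys = [x * x for x in xs]
model = train_mlp(xs, ys)
mse = sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE: {mse:.4f}")
```

The trained network fits the curve, but its weights are a sum of shifted and scaled tanh units rather than anything that reads as "y = x²". The behavior is learned; the explanation is not written down anywhere.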

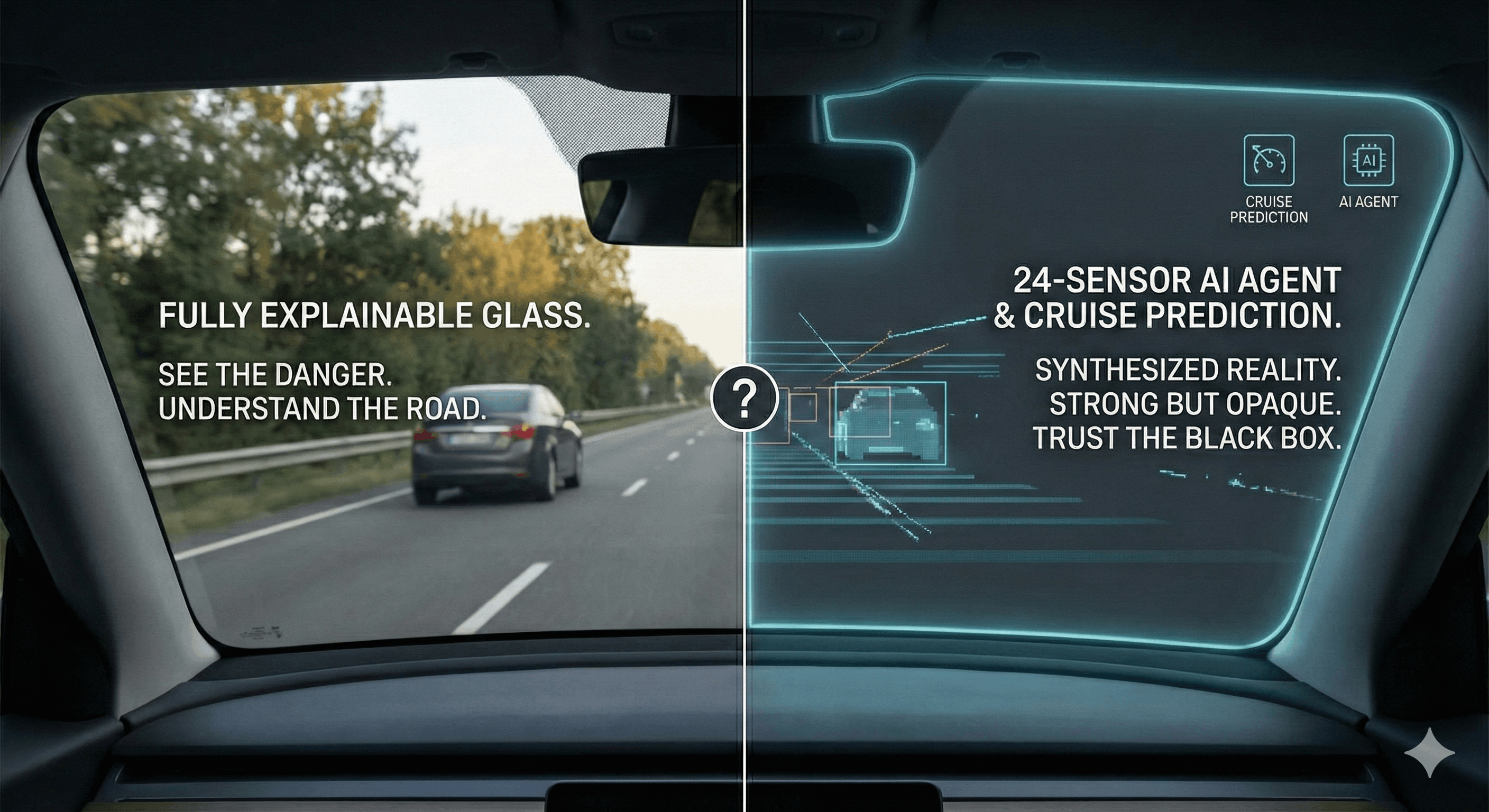

The trade-off is bittersweet: we hand over control to complex systems and rely on mechanisms we only partially understand. The question is not full explainability versus zero explainability, but how to build reliable, testable abstractions that are useful for both science and deployment.